How does the brain extract meaning from visual information in the world?

Answering this question could lead to artificial intelligence systems that are more compatible with human perception, and to new directions for treating impaired brain function.

Now is a particularly exciting time to address such questions, thanks to progress in machine learning and the availability of experimental data from neuroscience and psychology. We therefore build computer models of neural processing in the brain and collaborate with experimental groups.

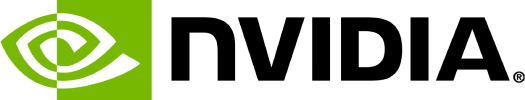

When I entered the field, I was intrigued by the hypothesis that visual images and movies (and sounds) have predictable and quantifiable statistical regularities to which the brain is sensitive. Both neural responses and perception are influenced by what surrounds a given point spatially in the image and by what has been observed in the past. Based on these principles, we developed models that have enriched our understanding of normalization, a nonlinear computation that is ubiquitous in the brain.

(Based on Coen-Cagli et al. Nature Neuroscience 2015; Coen-Cagli et al. PloS Computational Biology 2012)

Using our approach, we have advanced the prediction of how neurons in the primary visual cortex respond to natural images, and tested these predictions through experimental collaboration. In the past, both we and others have tested computational models against brain data, but often with simpler visual stimuli such as bars of light in the center and surround locations. We showed that for natural images, surround suppression was flexible: it was recruited only when the image was inferred to have a similar statistical structure in the center and surround locations.
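The idea of flexible surround suppression can be illustrated with a minimal sketch of divisive normalization. This is not the published model: the function name, the parameters `sigma` and `n`, and the gating variable `p_shared` are hypothetical, and the step that infers whether center and surround share statistical structure is replaced here by a probability supplied by hand.

```python
def normalized_response(center_drive, surround_drive, p_shared, sigma=1.0, n=2.0):
    """Toy divisive normalization with a gated surround (illustrative only).

    center_drive, surround_drive: filter outputs for the center and surround.
    p_shared: assumed probability (0..1) that center and surround share
              statistical structure; in a full model this would be inferred
              from the image rather than given.
    sigma, n: hypothetical semi-saturation constant and exponent.
    """
    c = abs(center_drive) ** n
    s = abs(surround_drive) ** n
    # The surround contributes to the normalization pool only to the
    # extent that it is judged to share structure with the center.
    pool = sigma ** n + c + p_shared * s
    return c / pool


# Same stimulus drives, two different inferences about the image.
r_independent = normalized_response(2.0, 3.0, p_shared=0.0)  # surround ignored
r_shared = normalized_response(2.0, 3.0, p_shared=1.0)       # surround suppresses
print(r_independent, r_shared)
```

With these example values the response is 0.8 when the surround is treated as independent and about 0.29 when it is treated as sharing structure with the center, so suppression appears only in the second case.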

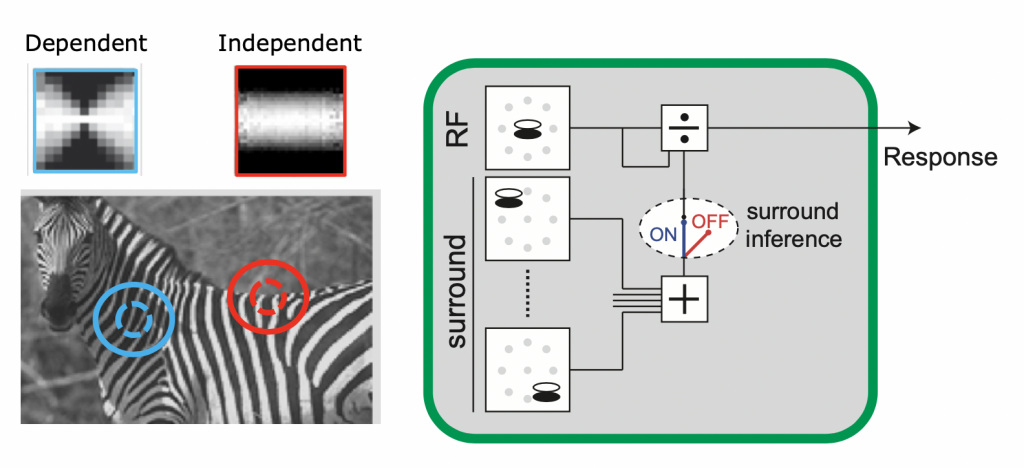

We also showed that a popular visual illusion can be explained using this principle, offering a window into how the brain perceives visual information.

(Based on Schwartz et al., Journal of Vision 2009)

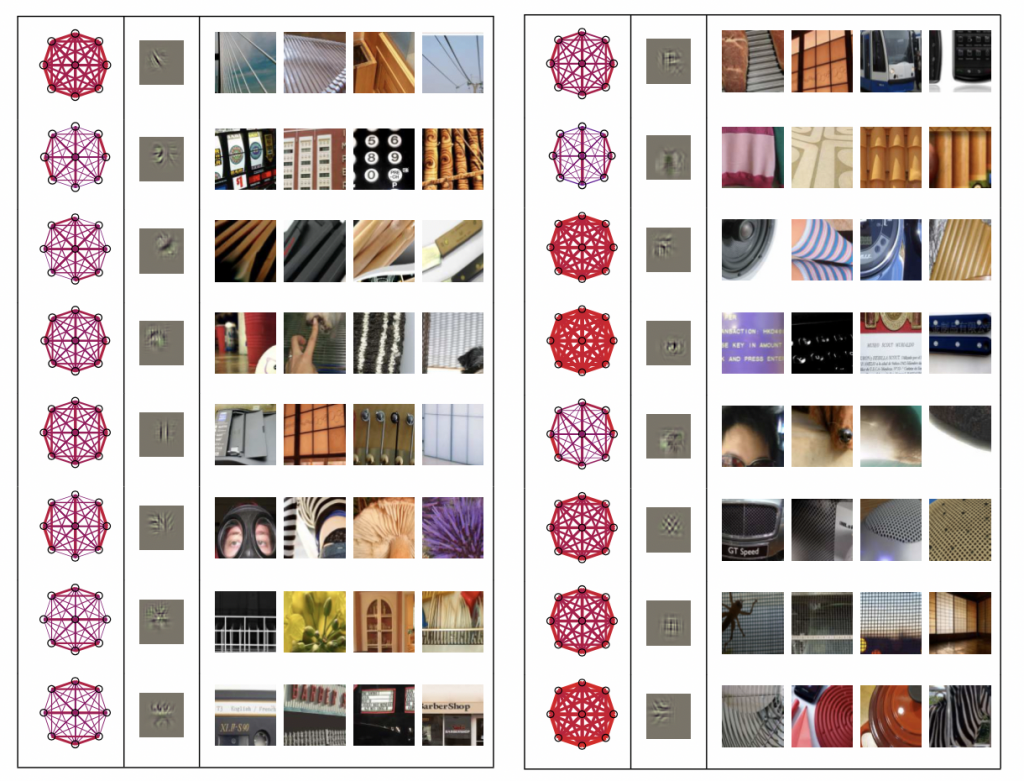

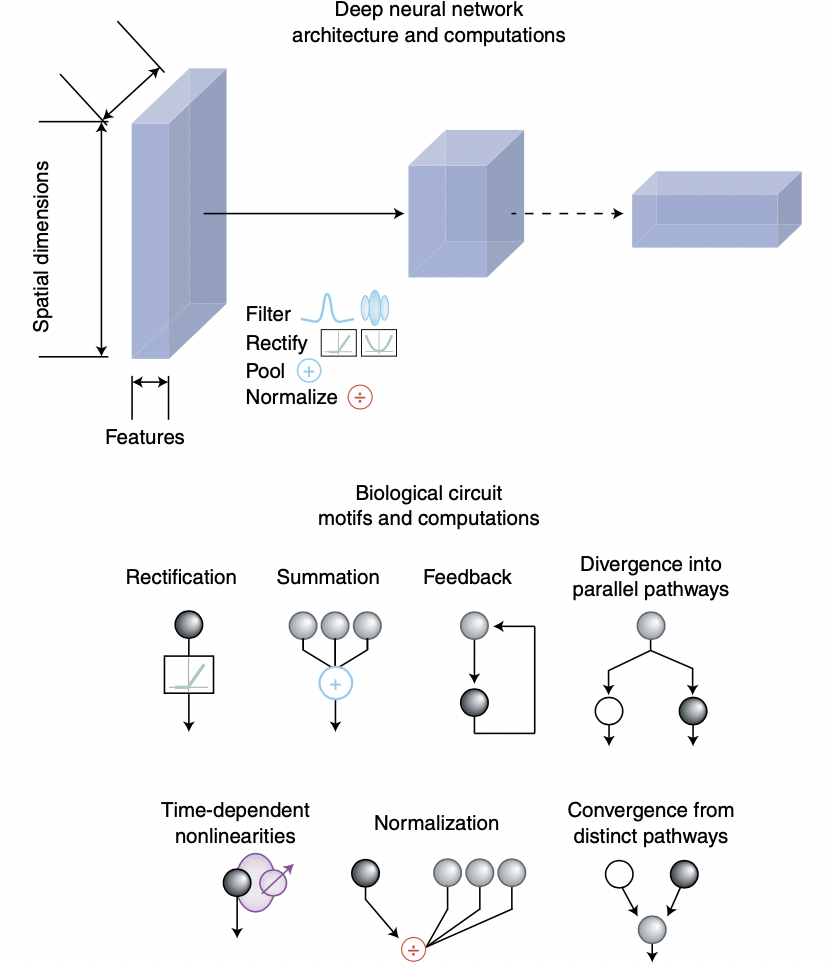

While building models of higher cortical areas has been difficult in the past, recent advances in deep learning have opened up exciting opportunities. We are particularly interested in understanding when nonlinearities are recruited in intermediate cortical areas, which have access to more complex image structure than the primary visual cortex. To this end, we are incorporating more biologically inspired computations into deep neural networks, including the flexible normalization models we have developed for the primary visual cortex.

(From Sanchez-Giraldo and Schwartz, Neural Computation, accepted 2019)

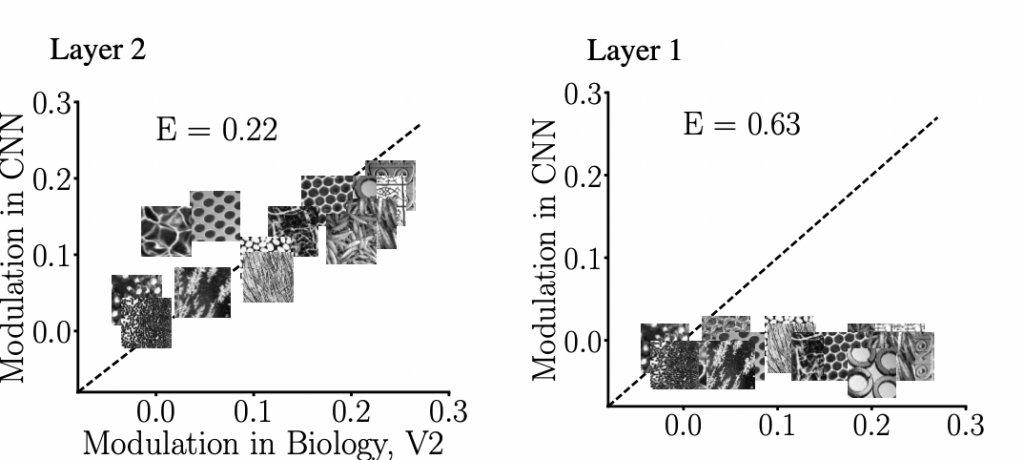

We are also developing approaches for quantifying how well deep learning models account for neural data on texture sensitivity in the secondary visual cortex.

(From Laskar et al., Journal of Vision 2020; data from Freeman et al., Nature Neuroscience 2013)

Deep learning has dramatically advanced artificial systems in tasks such as object recognition. But these systems also have failure modes in which they behave differently from human perception. By incorporating more biologically realistic computations into deep learning models, we expect our approaches to lead to better artificial systems that generalize better across stimuli and tasks.

(From Turner et al., Nature Neuroscience 2019)

Beyond the visual system, our work has spanned the auditory and motor systems. More recently, we have also been part of a collaborative team at the University of Miami, focused on predicting the effectiveness of treatments for Traumatic Brain Injury using machine learning approaches.